Ubuntu 24 LTS - Unifi Mongo Upgrades

For the longest time, UniFi only supported MongoDB 3.6 or older (or MongoDB 4.4 for some newer versions, 7.5 to 8.0). With the release of 8.1.x, this has now been updated to version 7.0. Still old, but supported. Good times.

However, the install on 7 is less than easy, and if you’re on an older server (or just upgraded to 24.04 LTS), then there are a number of steps… Hold on tight, here we go…

First step: back up UniFi, snapshot the server, make a brew, pray to the IT gods…
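On top of the UniFi backup and the snapshot, a cold copy of the database directory is cheap insurance. This is only a minimal sketch: it assumes the database lives in /var/lib/unifi/db (the path the upgrade script further down points at), and the `backup_dir` helper name and the destination directory are placeholders of mine, not anything UniFi ships.

```shell
# Hypothetical helper: archive a directory into a timestamped tarball.
# Usage: backup_dir <source-dir> <dest-dir>
# Prints the path of the archive it created.
backup_dir() {
    local src="$1" dest="$2"
    local stamp archive
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    mkdir -p "$dest" || return 1
    stamp="$(date +%Y%m%d-%H%M%S)"
    archive="$dest/$(basename "$src")-$stamp.tar.gz"
    # -C makes the paths inside the archive relative to the parent directory
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    echo "$archive"
}

# Example, from a root shell (stop UniFi first so the files are not
# changing underneath tar; the destination path is an assumption):
#   systemctl stop unifi
#   backup_dir /var/lib/unifi/db /root/unifi-db-backup
#   systemctl start unifi
```

If anything goes wrong later, the tarball can be unpacked back over the database directory with UniFi stopped.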

Next, we need to go from 3.6 to 4.4. Luckily, the ever great GlennR has a script for this. Go ahead and run his UniFi upgrade script, selecting the MongoDB upgrade option. For some reason I couldn’t get the script to go any further than 4.4…

The script can be found here:

https://get.glennr.nl/unifi/update/unifi-update.sh
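Before moving on, it is worth confirming that the script really left you on 4.4. A small sketch, assuming the first line of `mongod --version` has the usual `db version vX.Y.Z` form; the `mongod_major_minor` helper name is my own.

```shell
# Hypothetical helper: pull "major.minor" out of `mongod --version` output
# read from stdin. Assumes a first line such as "db version v4.4.29".
mongod_major_minor() {
    sed -n 's/^db version v\([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example:
#   mongod --version | mongod_major_minor
```

If this prints anything other than 4.4, I would sort that out before touching the steps below.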

Next, things get a bit wild, so I’ve adapted someone else’s script (https://techblog.nexxwave.eu/update-mongodb-4-4-to-7-0-on-unifi-servers/). Save the upgrade script below into a file, make it executable, and run it.

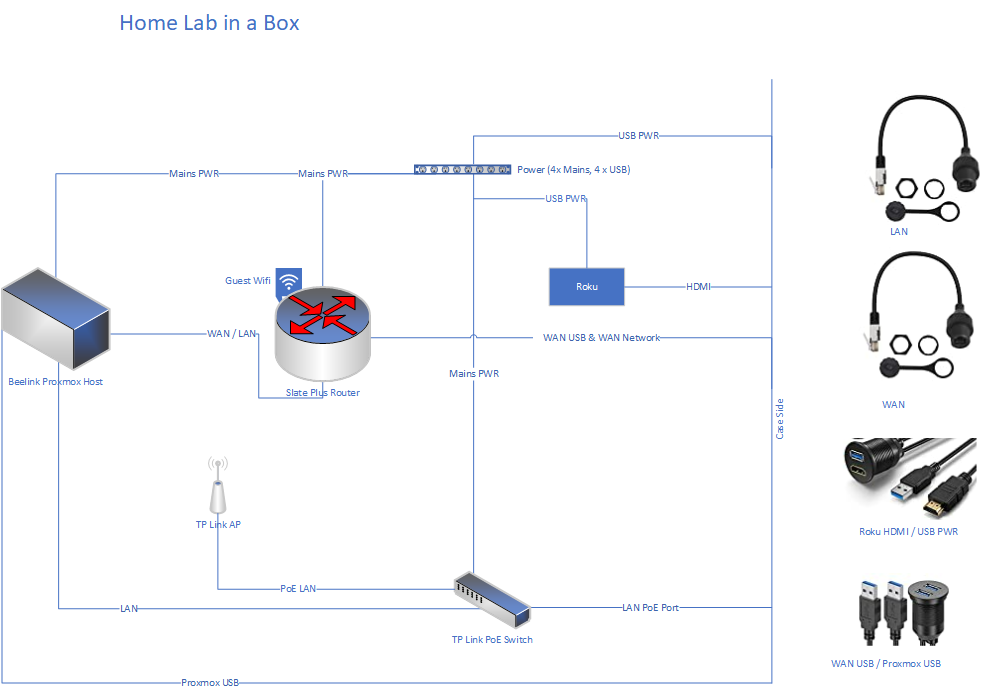

** Proxmox users: you may need to update or change your CPU type. MongoDB 5.0 and later need AVX, and the default kvm64 CPU type doesn’t expose the AVX flag, so you can either add a custom CPU model to your cpu-models.conf file (below) or change the CPU type to host. Either way, your physical CPU must support AVX.

/etc/pve/virtual-guest/cpu-models.conf:

cpu-model: avx
    flags +avx;+avx2;+xsave
    phys-bits host
    hidden 0
    hv-vendor-id proxmox
    reported-model kvm64
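Whichever route you take, you can check from inside the guest whether the AVX flag is actually visible before running the upgrade. A minimal sketch, assuming an x86_64 guest where /proc/cpuinfo has a `flags` line; the `has_avx` helper name is mine.

```shell
# Hypothetical helper: succeed if the given cpuinfo file lists the avx flag.
# Usage: has_avx [cpuinfo-file]   (defaults to /proc/cpuinfo)
has_avx() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # -w matches "avx" as a whole word, so avx2 on its own does not count
    grep -m1 '^flags' "$cpuinfo" | grep -qw 'avx'
}

# Example:
#   if has_avx; then echo "AVX visible"; else echo "AVX missing"; fi
```

If this reports AVX as missing, the MongoDB 5.0 and 6.0 binaries in the upgrade script are unlikely to start, so fix the CPU type first.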

Upgrade Script:

#!/bin/bash

# Upgrade MongoDB from 4.4 to 6.0

# Author: Nexxwave https://www.nexxwave.be

# MongoDB releases archive: https://www.mongodb.com/download-center/community/releases/archive

# MongoDB versions: https://www.mongodb.com/docs/manual/release-notes/

# Adapted for Ubuntu 22/24 by Michael Sage 28/8/2024

###

# Stop UniFi

###

echo "## Stopping UniFi"

systemctl stop unifi

###

# Download MongoDB Shell

# The legacy 'mongo' shell has been removed as of MongoDB 6.0, so we need a separate package (mongosh).

###

echo "## Download MongoDB Shell"

wget https://downloads.mongodb.com/compass/mongosh-2.2.5-linux-x64.tgz -P /tmp/

tar xvzf /tmp/mongosh-2.2.5-linux-x64.tgz -C /tmp/

###

# Upgrade to MongoDB 5.0.26

###

echo "## Downloading and extracting MongoDB 5.0.26"

wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-ubuntu2004-5.0.26.tgz -P /tmp/

tar xvzf /tmp/mongodb-linux-x86_64-ubuntu2004-5.0.26.tgz -C /tmp/

echo "## Starting MongoDB 5.0.26 in background"

sudo -u unifi /tmp/mongodb-linux-x86_64-ubuntu2004-5.0.26/bin/./mongod --port 27117 --dbpath /var/lib/unifi/db &

sleep 60

echo "## Executing feature compatibility version 5.0"

/tmp/mongosh-2.2.5-linux-x64/bin/./mongosh --port 27117 --eval 'db.adminCommand( { setFeatureCompatibilityVersion: "5.0" } ) '

echo "## Shutting down MongoDB 5.0.26"

/tmp/mongosh-2.2.5-linux-x64/bin/./mongosh --port 27117 --eval 'db.getSiblingDB("admin").shutdownServer({ "timeoutSecs": 60 })'

sleep 10

###

# Upgrade to MongoDB 6.0.15

###

echo "## Downloading and extracting MongoDB 6.0.15"

wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-ubuntu2004-6.0.15.tgz -P /tmp/

tar xvzf /tmp/mongodb-linux-x86_64-ubuntu2004-6.0.15.tgz -C /tmp/

echo "## Starting MongoDB 6.0.15 in background"

sudo -u unifi /tmp/mongodb-linux-x86_64-ubuntu2004-6.0.15/bin/./mongod --port 27117 --dbpath /var/lib/unifi/db &

sleep 60

echo "## Executing feature compatibility version 6.0"

/tmp/mongosh-2.2.5-linux-x64/bin/./mongosh --port 27117 --eval 'db.adminCommand( { setFeatureCompatibilityVersion: "6.0" } )'

echo "## Shutting down MongoDB 6.0.15"

/tmp/mongosh-2.2.5-linux-x64/bin/./mongosh --port 27117 --eval 'db.getSiblingDB("admin").shutdownServer({ "timeoutSecs": 60 })'

sleep 10

echo "## All done"
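The script above relies on fixed `sleep 60` pauses to give mongod time to come up. On a slow or very fast box, a polling loop may be friendlier. This is only a sketch of that idea, not part of the original script: `wait_until` is a made-up helper name, and the ping command in the example is one I would expect to work with the mongosh build the script downloads.

```shell
# Hypothetical helper: retry a command once a second until it succeeds
# or the timeout (in seconds) runs out.
# Usage: wait_until <timeout-seconds> <command> [args...]
wait_until() {
    local timeout="$1"; shift
    local waited=0
    until "$@" >/dev/null 2>&1; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1    # gave up
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}

# Example: instead of "sleep 60" after starting mongod in the background
#   wait_until 60 /tmp/mongosh-2.2.5-linux-x64/bin/mongosh --port 27117 \
#       --quiet --eval 'db.runCommand({ ping: 1 })' || exit 1
```

The nice side effect is that the script can bail out with an error if mongod never answers, rather than carrying on blindly to the next step.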

Then we move on to upgrading to version 7. Nearly there!

curl -fsSL https://pgp.mongodb.com/server-7.0.asc | sudo gpg -o /usr/share/keyrings/mongodb-server-7.0.gpg --dearmor

echo "deb [ arch=amd64,arm64 signed-by=/usr/share/keyrings/mongodb-server-7.0.gpg ] https://repo.mongodb.org/apt/ubuntu jammy/mongodb-org/7.0 multiverse" | sudo tee -a /etc/apt/sources.list.d/mongodb-org-7.0.list

sudo apt update

sudo apt install mongodb-org mongodb-org-mongos mongodb-org-server mongodb-org-shell mongodb-org-tools mongodb-org-database-tools-extra
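One small gotcha with the commands above: `tee -a` appends, so running the repository line twice leaves a duplicate entry in the list file. If you expect to rerun these steps, something like the following sketch avoids that. The `ensure_line` helper is hypothetical, just a name I picked.

```shell
# Hypothetical helper: append a line to a file only if it is not already there.
# Usage: ensure_line <file> <line>
ensure_line() {
    local file="$1" line="$2"
    # -x matches whole lines only, -F treats the line as a literal string
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example, from a root shell:
#   ensure_line /etc/apt/sources.list.d/mongodb-org-7.0.list \
#     "deb [ arch=amd64,arm64 signed-by=/usr/share/keyrings/mongodb-server-7.0.gpg ] https://repo.mongodb.org/apt/ubuntu jammy/mongodb-org/7.0 multiverse"
```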

Now you can start UniFi again:

sudo systemctl start unifi

Finally, finish the update with:

mongosh --port 27117 --eval 'db.adminCommand( { setFeatureCompatibilityVersion: "7.0", confirm: true } )'

Check that everything went OK:

mongosh --port 27117 --eval 'db.adminCommand( { getParameter: 1, featureCompatibilityVersion: 1 } )'

The output should look something like this:

{ featureCompatibilityVersion: { version: '7.0' }, ok: 1 }
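If you would rather have the check pass or fail on its own instead of eyeballing the output, the version can be pulled out of that text. A rough sketch, assuming the output contains `version: '7.0'` as shown above; `fcv_is` is a helper name I made up.

```shell
# Hypothetical helper: read the getParameter output on stdin and succeed
# only if the reported featureCompatibilityVersion equals the expected one.
# Usage: <command printing the output> | fcv_is <expected-version>
fcv_is() {
    local expected="$1" actual
    actual="$(sed -n "s/.*version: '\([0-9.]*\)'.*/\1/p" | head -n 1)"
    [ "$actual" = "$expected" ]
}

# Example:
#   mongosh --port 27117 --quiet \
#     --eval 'db.adminCommand( { getParameter: 1, featureCompatibilityVersion: 1 } )' \
#     | fcv_is 7.0 && echo "FCV is 7.0"
```

The same check with 4.4, 5.0 or 6.0 as the expected value could be used between the earlier steps, since each MongoDB version expects the previous feature compatibility version to be set before it takes over.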